One thing I love about current technology is that it’s really opened up the world of music making to anybody, even on a modest budget. Even a smartphone can be used to make music now, with hundreds of music apps available.

So that’s a great world to explore, and you don’t really need to understand how the software works to use it. But I find that, as with most things, if you understand the technology that makes everything tick, you can do so much more.

So here’s the first of an occasional series on audio fundamentals. This starts with an exploration of the nature of sound and how sound can be represented electrically.

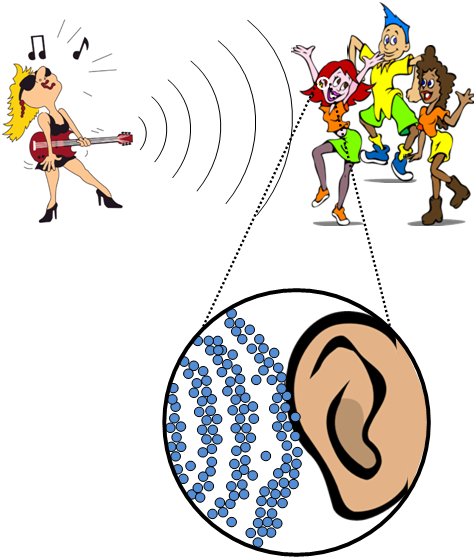

Sound is a phenomenon by which the vibration of physical objects causes sympathetic vibrations in the surrounding medium. What does that mean exactly? Well, consider a note played on an electric guitar. The cone in the loudspeaker that the guitar is plugged into vibrates rapidly back and forth when the note is played. This causes changes in air pressure around the cone, and these changes in pressure propagate outwards as waves. What’s actually happening here is that the molecules in the air are more densely packed together in the high-pressure areas and less densely packed in the low-pressure areas.

When these waves reach the ears of the people listening and dancing to the band, they cause those people’s ear drums to vibrate rapidly as the air pressure changes. The ear converts these vibrations into electrical nerve impulses and sends them to the brain, which interprets them as the music the dancers are listening to.

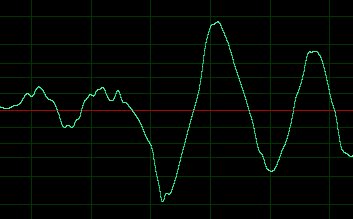

If you were to draw a graph showing the air pressure at one point in the room on the y-axis against time on the x-axis, you would see a representation of a sound wave, like this:

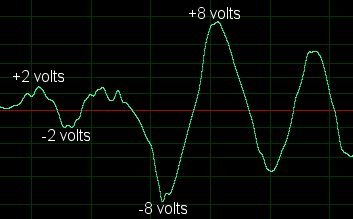
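To make that graph a little more concrete, here is a minimal Python sketch that computes the points you would plot. It assumes the simplest possible sound, a single pure tone, which is a sine wave. Real sounds are much more complicated shapes. The 440 Hz frequency, the amplitude of 1.0 (in arbitrary units) and the function names are my own choices for illustration.

```python
import math


def pressure_deviation(t, frequency_hz=440.0, amplitude=1.0):
    """Pressure above or below normal air pressure at time t (in seconds).

    Assumes a pure tone, so the pressure follows a sine wave.
    Positive values are the densely packed (high-pressure) moments,
    negative values the sparse (low-pressure) ones.
    """
    return amplitude * math.sin(2 * math.pi * frequency_hz * t)


def sample_wave(duration_s, step_s, frequency_hz=440.0, amplitude=1.0):
    """Return a list of (time, pressure) points, ready to plot on a graph."""
    steps = int(round(duration_s / step_s))
    return [
        (i * step_s, pressure_deviation(i * step_s, frequency_hz, amplitude))
        for i in range(steps + 1)
    ]


if __name__ == "__main__":
    # Print the first few points of a 440 Hz tone, one every 0.25 ms.
    for t, p in sample_wave(0.002, 0.00025):
        print(f"{t * 1000:5.2f} ms  {p:+.3f}")
```

Plot time on the x-axis and pressure on the y-axis and you get the familiar smooth wave shape.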

If you want to record the sound so you can reproduce it later, you can do so with a microphone. A microphone works very much like an ear drum. It has a sensitive diaphragm that moves back and forth in time with the pressure changes in the air. These movements are converted into a changing voltage in an electrical circuit. If, for example, you had a circuit with a range of ±10 volts, then a quiet sound might swing the voltage between -2 and +2 volts, while a louder sound might swing it between -8 and +8 volts. If you plotted the voltage in the circuit on the y-axis of a graph against time on the x-axis, you’d get something very familiar: the same wave shape as the graph of air pressure against time.
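The quiet and loud examples can be sketched in a few lines of Python. This is only an illustration under some assumptions of mine: the sound is a pure 440 Hz tone, the microphone circuit follows it perfectly, and anything beyond the ±10 volt range is simply cut off at the limit (a real circuit would distort in its own way). The function names are hypothetical.

```python
import math

CIRCUIT_LIMIT_VOLTS = 10.0  # the +/-10 volt range from the example


def microphone_voltage(t, peak_volts, frequency_hz=440.0):
    """Voltage in the circuit at time t (seconds) for a pure tone.

    peak_volts stands in for loudness: about 2 for the quiet sound,
    about 8 for the loud one. Values outside the circuit's range are
    clamped to the limit, which is a simplifying assumption.
    """
    volts = peak_volts * math.sin(2 * math.pi * frequency_hz * t)
    return max(-CIRCUIT_LIMIT_VOLTS, min(CIRCUIT_LIMIT_VOLTS, volts))


def voltage_range(peak_volts, frequency_hz=440.0, samples_per_cycle=1000):
    """Lowest and highest voltage seen over one full cycle of the wave."""
    period = 1.0 / frequency_hz
    volts = [
        microphone_voltage(i * period / samples_per_cycle, peak_volts, frequency_hz)
        for i in range(samples_per_cycle)
    ]
    return min(volts), max(volts)


if __name__ == "__main__":
    for label, peak in [("quiet", 2.0), ("loud", 8.0), ("too loud", 15.0)]:
        low, high = voltage_range(peak)
        print(f"{label:>8}: {low:+.1f} V to {high:+.1f} V")
```

The shape of the wave is the same in each case. Only the height of the swings changes with loudness, until the circuit runs out of range.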

That voltage could then be passed through an amplifier to a speaker. This would cause the cone to vibrate back and forth, resulting in changes in air pressure and beginning the whole cycle once again.

If you wanted to preserve the sound, the voltage could be turned into a changing magnetic field on a length of audio tape. At a later point, this magnetic field could be turned back into a voltage in a circuit to reproduce the sound.

These types of circuits and mechanisms like audio tape are known as analogue devices, because they represent an analogue of the sound as a continuously variable form. In recent years analogue means of representing sound have largely given way to digital representations, so I’ll look at that in the next post in this series.
